
Model Context Protocol in Practice

Author: Diego Carpintero

Introduction

Model Context Protocol (MCP) is an open protocol, developed by Anthropic, for connecting AI applications with external data sources, tools, and systems. This interoperability has significant implications: existing software platforms can share contextual information with AI applications and, in turn, be enhanced with the capabilities of frontier models. For end users, this translates into more powerful, context-rich AI applications and workflows.

A Solution to the M×N Integration Problem

In practice, MCP standardizes how AI applications connect to and work with external systems, much as APIs standardize how web applications interact with backend servers, databases, and services. Before MCP, integrating AI applications with external systems was highly fragmented and required building numerous custom connectors and prompts. This led to an inefficient M×N problem: each of M AI applications needed its own integration for each of N tools.

By providing a common protocol, MCP reduces this to a simpler M+N problem: each application implements the protocol once as a client, and each tool implements it once as a server. This promotes modularity and reduces complexity. AI developers can connect their applications to any MCP server, while enterprises benefit from a clear separation of concerns between AI product teams and the teams that own the underlying systems.
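To make the difference concrete, the sketch below counts the integrations needed for a hypothetical setup of 5 AI applications and 20 tools (illustrative numbers, not measurements). The function name integration_count is our own.

```python
def integration_count(num_apps: int, num_tools: int) -> dict[str, int]:
    """Number of integrations needed with and without a shared protocol."""
    return {
        # Without a shared protocol: every application needs a connector for every tool
        "point_to_point": num_apps * num_tools,
        # With MCP: each application implements one client, each tool one server
        "with_protocol": num_apps + num_tools,
    }


counts = integration_count(num_apps=5, num_tools=20)
print(counts)  # {'point_to_point': 100, 'with_protocol': 25}
```

The gap widens as either side grows: adding a 21st tool costs 5 new connectors in the point-to-point world, but only one new server with a shared protocol.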

Growing Ecosystem

The ecosystem is growing quickly: at the time of writing, more than 10,000 MCP server integrations have been implemented, including for some of the most widely used software platforms, such as Google Drive, GitHub, AWS, Notion, PayPal, and Zapier.

Building a custom MCP Integration

In this guide, we dive into the MCP architecture and demonstrate how to implement a Python MCP server and client for NewsAPI, enabling LLMs to retrieve and analyze news articles automatically.

Table of Contents

- MCP Architecture

- MCP Server Implementation

- MCP Client Implementation

- Inspector Tooling

- Demo in Claude Desktop

- References

MCP Architecture

MCP takes inspiration from the Language Server Protocol (LSP), an open standard developed by Microsoft in 2016 that defines a common way for development tools (IDEs) to integrate language-specific features such as code navigation, code analysis, and code intelligence.

At its core, MCP follows a client-server architecture using JSON-RPC 2.0 messages to establish communication between:

- Hosts: User-facing AI applications (like Claude Desktop, AI-enhanced IDEs, inference libraries, and Agents) that host MCP clients and orchestrate the overall flow between user requests, LLM processing, and external systems. They enforce security policies and handle user authorization.

- Servers: Lightweight services that expose specific context and capabilities via MCP primitives. They must respect security constraints.

- Clients: Components within the host application that manage 1:1 connections with specific MCP servers. Each Client handles the protocol-level details of MCP communication and acts as an intermediary between the Host's logic and the Server.
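The division of roles can be sketched as a small in-process toy model. This is not the MCP SDK: ToyServer, ToyClient and ToyHost are hypothetical names, tools are plain Python callables, and no JSON-RPC is exchanged. It only illustrates that each client is bound to exactly one server, while the host owns several clients and routes each tool call to the client whose server exposes that tool.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToyServer:
    """Stand-in for an MCP server: a name plus the tools it exposes."""
    name: str
    tools: dict[str, Callable[..., Any]]


@dataclass
class ToyClient:
    """Owns a 1:1 connection to exactly one server."""
    server: ToyServer

    def list_tools(self) -> list[str]:
        return sorted(self.server.tools)

    def call_tool(self, tool: str, **arguments: Any) -> Any:
        return self.server.tools[tool](**arguments)


@dataclass
class ToyHost:
    """Creates one client per server and routes each tool call to the right client."""
    clients: dict[str, ToyClient] = field(default_factory=dict)

    def connect(self, server: ToyServer) -> None:
        self.clients[server.name] = ToyClient(server)

    def route(self, tool: str, **arguments: Any) -> Any:
        for client in self.clients.values():
            if tool in client.list_tools():
                return client.call_tool(tool, **arguments)
        raise KeyError(f"No connected server exposes tool {tool!r}")


host = ToyHost()
host.connect(ToyServer("news", {"fetch_news": lambda query: f"articles about {query}"}))
host.connect(ToyServer("calculator", {"add": lambda a, b: a + b}))
print(host.route("add", a=2, b=3))  # 5
```

In a real host, routing happens after the LLM selects a tool, and the call travels as a JSON-RPC tools/call message over a transport.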

MCP Primitives

MCP servers expose capabilities through standardized interfaces:

- Tools (Model Controlled): Functions that a client can invoke to perform specific actions (e.g. retrieving, searching, sending messages, interacting with a database), similar to function calling. The AI model decides when to call them based on the user's intent.

- Resources (Application Controlled): Read-only, potentially dynamic data, comparable to read-only API endpoints (e.g. file contents, database records, and configurations).

- Prompt Templates (User Controlled): Pre-defined, tested templates that guide users' interactions and make optimal use of tools and resources (e.g. prompt engineering for data analytics, common workflows, specialized task templates, guided interactions).

And optionally:

- Sampling: Lets a server request LLM completions through the client, enabling server-initiated agentic behaviors and recursive LLM interactions.
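Sampling reverses the usual direction: the server sends a request to the client, which forwards it to the host's LLM (and may ask the user to approve it). The sketch below builds such a request as a plain dictionary, assuming the sampling/createMessage method and the messages and maxTokens parameters from the MCP specification. The helper name sampling_request is hypothetical, and optional fields such as systemPrompt or modelPreferences are omitted.

```python
import json


def sampling_request(request_id: int, prompt: str, max_tokens: int = 200) -> dict:
    """Build a sampling/createMessage request (server -> client) as a dictionary."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "sampling/createMessage",
        "params": {
            # Conversation to send to the host's LLM
            "messages": [
                {"role": "user", "content": {"type": "text", "text": prompt}}
            ],
            # Upper bound on the length of the completion
            "maxTokens": max_tokens,
        },
    }


request = sampling_request(1, "Summarize the latest headlines about AI regulation.")
print(json.dumps(request, indent=2))
```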

Communication Protocol

MCP clients and servers exchange well-defined messages in JSON-RPC 2.0, a lightweight, language-agnostic remote procedure call protocol encoded in JSON. This standardization is critical for interoperability across the ecosystem.
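For illustration, the sketch below writes out the three kinds of JSON-RPC 2.0 messages as Python dictionaries: a request invoking our fetch_news tool through the MCP tools/call method, its matching response, and a notification. The argument values and the response text are placeholders, and the kind helper is hypothetical; it simply applies the JSON-RPC rule that requests carry an id, notifications do not, and responses carry a result or error instead of a method.

```python
import json

# Request: carries an "id" and expects a response with the same id
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "fetch_news", "arguments": {"query": "model context protocol"}},
}

# Response: echoes the id and carries either "result" or "error"
response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "(article data)"}], "isError": False},
}

# Notification: no "id", so no response is expected
notification = {"jsonrpc": "2.0", "method": "notifications/initialized"}


def kind(message: dict) -> str:
    """Classify a JSON-RPC 2.0 message by the fields it carries."""
    if "method" in message:
        return "request" if "id" in message else "notification"
    return "response"


for msg in (request, response, notification):
    print(kind(msg), json.dumps(msg))
```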

Messages flow between client and server over a transport. The main transports are:

- stdio (Standard Input/Output): Used when the Client and Server run on the same machine. The Host application launches the Server as a subprocess and communicates with it by writing to its standard input (stdin) and reading from its standard output (stdout). This is simple and effective for local integrations.

- HTTP via SSE (Server-Sent Events): Used when the Client connects to the Server over HTTP. After an initial setup, the Server can push messages (events) to the Client over a stateful connection using the SSE standard.

- Streamable HTTP: A newer transport for remote servers that supersedes HTTP via SSE and supports both stateful and stateless deployments.
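With the stdio transport, the MCP specification frames messages as newline-delimited JSON: each JSON-RPC message is written on its own line and must not contain embedded newlines. Below is a minimal sketch of that framing, assuming an in-memory io.StringIO stands in for the subprocess pipe; write_message and read_messages are hypothetical helpers, not SDK functions.

```python
import io
import json
from typing import Iterator, TextIO


def write_message(stream: TextIO, message: dict) -> None:
    """Serialize one JSON-RPC message as a single line terminated by a newline."""
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[dict]:
    """Yield one parsed JSON-RPC message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# An in-memory buffer stands in for the server's stdin pipe
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
pipe.seek(0)

messages = list(read_messages(pipe))
print([m["method"] for m in messages])  # ['notifications/initialized', 'tools/list']
```

json.dumps without an indent argument never emits raw newlines (newlines inside strings are escaped as \n), which is what makes the line-based framing safe.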

MCP Server Implementation

In this section, we implement an MCP server for news articles in Python using FastMCP. While FastMCP provides a convenient abstraction layer, you can alternatively implement the server at a lower level by directly defining and handling the JSON-RPC requests. This approach gives developers full control over the protocol details and lets them customize every aspect of the server, including lifecycle management. An example can be found in the Advanced Usage section of the MCP documentation.

Exposing Tools

Our server will expose fetch_news and fetch_headlines as tools using the @mcp.tool() decorator:

```python
from mcp.server.fastmcp import FastMCP, Context

from newsapi.connector import NewsAPIConnector
from newsapi.models import NewsResponse

mcp = FastMCP("News Server")
news_api_connector = NewsAPIConnector()


@mcp.tool()
async def fetch_news(
    query: str,
    from_date: str | None = None,
    to_date: str | None = None,
    language: str = "en",
    sort_by: str = "publishedAt",
    page_size: int = 10,
    page: int = 1,
    ctx: Context = None,
) -> NewsResponse:
    """
    Retrieves news articles.

    Args:
        query: Keywords or phrases to search for
        from_date: Optional start date (YYYY-MM-DD)
        to_date: Optional end date (YYYY-MM-DD)
        language: Language code (default: en)
        sort_by: Sort order: publishedAt (default), popularity, or relevancy
        page_size: Number of results per page (default: 10, max: 100)
        page: Page number for pagination (default: 1)
        ctx: MCP Context

    Returns:
        News Articles
    """
    if ctx:
        # Context logging methods are coroutines and must be awaited
        await ctx.info(f"Searching news for: {query}")

    # Drop unset (None) parameters and cap pageSize at the NewsAPI maximum
    params = {
        k: v
        for k, v in {
            "q": query,
            "from": from_date,
            "to": to_date,
            "language": language,
            "sortBy": sort_by,
            "pageSize": min(page_size, 100),
            "page": page,
        }.items()
        if v is not None
    }

    success, result = await news_api_connector.search_everything(**params)
    if not success:
        # Raising lets FastMCP report the failure to the client as a tool error
        raise RuntimeError(f"Failed to fetch news articles: {result}")

    return result


@mcp.tool()
async def fetch_headlines(
    category: str,
    query: str,
    country: str = "us",
    page_size: int = 10,
    page: int = 1,
    ctx: Context = None,
) -> NewsResponse:
    # See fetch_headlines @tool implementation in ./src/server.py
    ...


if __name__ == "__main__":
    mcp.run(transport="stdio")
```

We encapsulate the connection to NewsAPI in a connector class, which validates the responses with Pydantic models:

```python
import os
from typing import Tuple, Union

import httpx

from newsapi.models import NewsResponse


class NewsAPIConnector:
    """
    Handles a connection with the NewsAPI and retrieves news articles.
    """

    def __init__(self):
        self.api_key = os.getenv("NEWSAPI_KEY")
        # The base URL must end with "/" because endpoint paths are appended to it
        self.base_url = os.getenv("NEWSAPI_URL", "https://newsapi.org/v2/")

    async def get_client(self) -> httpx.AsyncClient:
        return httpx.AsyncClient(timeout=30.0, headers={"X-Api-Key": self.api_key})

    async def search_everything(
        self, **kwargs
    ) -> Tuple[bool, Union[str, NewsResponse]]:
        """
        Retrieves News Articles from the NewsAPI "/everything" endpoint.

        Args:
            **kwargs: Request parameters (see https://newsapi.org/docs/endpoints/everything)

        Returns:
            A tuple containing (success, result) where:
            - success: A boolean indicating if the request was successful
            - result: Either an error message (string) or the validated NewsResponse model
        """
        async with await self.get_client() as client:
            try:
                response = await client.get(f"{self.base_url}everything", params=kwargs)
                response.raise_for_status()
                return True, NewsResponse.model_validate(response.json())
            except httpx.HTTPStatusError as e:
                # Raised by raise_for_status() for 4xx/5xx responses
                return False, f"HTTP error {e.response.status_code}: {e.response.text}"
            except httpx.RequestError as e:
                # Network-level failures such as connection errors and timeouts
                return False, f"Request error: {str(e)}"
            except Exception as e:
                return False, f"Unexpected error: {str(e)}"

    async def get_top_headlines(
        self, **kwargs
    ) -> Tuple[bool, Union[str, NewsResponse]]:
        # See get_top_headlines implementation in ./newsapi/connector.py
        ...
```
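The connector builds endpoint URLs with f"{self.base_url}everything", which only works when NEWSAPI_URL ends with a slash. The sketch below shows the pitfall and one way to make the join robust with urllib.parse.urljoin; endpoint_url is a hypothetical helper, not part of the original connector.

```python
from urllib.parse import urljoin


def endpoint_url(base_url: str, path: str) -> str:
    """Join a base URL and an endpoint path, tolerating a missing trailing slash."""
    # Normalize to exactly one trailing slash so urljoin appends instead of replacing
    return urljoin(base_url.rstrip("/") + "/", path)


# Plain concatenation and urljoin without the slash both go wrong:
print("https://newsapi.org/v2" + "everything")                   # https://newsapi.org/v2everything
print(urljoin("https://newsapi.org/v2", "everything"))           # https://newsapi.org/everything
print(endpoint_url("https://newsapi.org/v2", "everything"))      # https://newsapi.org/v2/everything
print(endpoint_url("https://newsapi.org/v2/", "top-headlines"))  # https://newsapi.org/v2/top-headlines
```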

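The Pydantic models use a camelCase alias generator, so that NewsAPI's JSON keys (e.g. urlToImage) map onto snake_case fields: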

```python
from datetime import datetime

from pydantic import BaseModel, ConfigDict, HttpUrl
from pydantic.alias_generators import to_camel


class ArticleSource(BaseModel):
    model_config = ConfigDict(alias_generator=to_camel, populate_by_name=True)

    id: str | None
    name: str | None


class Article(BaseModel):
    model_config = ConfigDict(alias_generator=to_camel, populate_by_name=True)

    source: ArticleSource
    author: str | None
    title: str | None
    description: str | None
    url: HttpUrl | None
    url_to_image: HttpUrl | None
    published_at: datetime | None
    content: str | None


class NewsResponse(BaseModel):
    model_config = ConfigDict(alias_generator=to_camel, populate_by_name=True)

    status: str
    totalResults: int
    articles: list[Article]
```
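The alias generator is what lets snake_case fields accept NewsAPI's camelCase JSON keys. The following standard-library sketch imitates that mapping for illustration only: snake_to_camel and from_camel_payload are hypothetical helpers, not Pydantic's implementation (which handles more edge cases and also validates types such as HttpUrl and datetime), and the sample payload is made up.

```python
def snake_to_camel(name: str) -> str:
    """Illustrative snake_case -> camelCase conversion, e.g. 'url_to_image' -> 'urlToImage'."""
    head, *rest = name.split("_")
    return head + "".join(part.capitalize() for part in rest)


ARTICLE_FIELDS = ["source", "author", "title", "description",
                  "url", "url_to_image", "published_at", "content"]


def from_camel_payload(payload: dict, fields: list[str]) -> dict:
    """Rename camelCase JSON keys to snake_case field names, ignoring unknown keys."""
    aliases = {snake_to_camel(f): f for f in fields}
    return {aliases[key]: value for key, value in payload.items() if key in aliases}


raw = {"title": "MCP in practice", "urlToImage": None,
       "publishedAt": "2025-04-20T10:00:00Z", "extra": 1}
print(from_camel_payload(raw, ARTICLE_FIELDS))
# {'title': 'MCP in practice', 'url_to_image': None, 'published_at': '2025-04-20T10:00:00Z'}
```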

Exposing Resources

Resources let our MCP server expose read-only data to the client, identified by a URI and a data format (MIME type):

```python
@mcp.resource("memo://newstopics/", mime_type="application/json")
def list_topics():
    topics = [
        "business",
        "entertainment",
        "general",
        "health",
        "science",
        "sports",
        "technology",
    ]
    return topics
```
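On the wire, a client retrieves this resource with a resources/read request. The sketch below builds the kind of result we would expect back, assuming the ReadResourceResult shape from the MCP specification, in which each entry in contents carries the uri, its mimeType, and either text or a base64 blob. FastMCP performs this serialization for us; read_resource_result is a hypothetical helper, and the exact JSON whitespace produced by the SDK may differ.

```python
import json

TOPICS = ["business", "entertainment", "general", "health",
          "science", "sports", "technology"]


def read_resource_result(uri: str, topics: list[str]) -> dict:
    """Wrap JSON data as a resources/read result: one text content entry with its MIME type."""
    return {
        "contents": [
            {"uri": uri, "mimeType": "application/json", "text": json.dumps(topics)}
        ]
    }


result = read_resource_result("memo://newstopics/", TOPICS)
print(json.loads(result["contents"][0]["text"])[:3])  # ['business', 'entertainment', 'general']
```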

Exposing Prompts

Prompts define reusable, optimized templates of user and assistant messages that the client can offer to users:

```python
@mcp.prompt(
    name="topic-news-analysis",
    description="analyzes the news development and sentiment evolution in a certain topic during a certain period",
)
def analyze_topic(topic: str, from_date: str, to_date: str):
    return f"""
# News Development & Sentiment Analysis: {topic}

## Analysis Parameters
- **Topic**: {topic}
- **Time Period**: {from_date} to {to_date}

## Instructions
You are a team of skilled researchers. Your task is to conduct a comprehensive analysis of news coverage and sentiment evolution for the specified topic during the given time period. Your analysis should include:

### 1. Timeline of Key Developments
- Identify major news events, announcements, or developments related to {topic}
- Create a chronological timeline of significant moments
- Note any catalysts that drove increased media attention
- Highlight turning points or inflection points in the narrative

### 2. Sentiment Evolution Analysis
- Track how overall sentiment (positive, negative, neutral) changed over time
- Identify specific events that caused sentiment shifts
- Analyze the intensity and duration of sentiment changes

## Output Format
Structure your response as follows:

**Executive Summary** (2-3 paragraphs)
Brief overview of the most significant findings

**Key Insights**
- 3-5 bullet points highlighting the most important discoveries
- Focus on actionable insights or surprising findings

**Visual Data Suggestions**
Provide a chart, graph, or visualization that would enhance understanding of the trends

**Methodology Notes**
Briefly explain your analytical approach and any limitations

## Analysis Guidelines
- Use specific examples and quotes to support your findings
- Quantify trends where possible (e.g., "sentiment shifted from 60% negative to 40% positive")
- Maintain objectivity while noting subjective interpretations
- Consider context from broader news environment and competing stories
- Address any data gaps or limitations in available coverage
- Cross-reference multiple sources to verify patterns
- Note any potential biases in source selection or coverage

Focus on providing actionable insights that help understand not just what happened, but why sentiment and coverage evolved as they did, and what this might indicate for future developments related to {topic}.
"""
```
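When a client calls prompts/get with a topic and dates, the server renders the template and returns it as a list of messages. As far as we understand FastMCP, a prompt function that returns a plain string is wrapped into a single user message, and returning a list of messages is the way to include assistant turns. The sketch below mimics that result shape with dictionaries; prompt_result and the abbreviated render_topic_prompt are hypothetical stand-ins, not SDK functions.

```python
def render_topic_prompt(topic: str, from_date: str, to_date: str) -> str:
    """Abbreviated stand-in for analyze_topic's template."""
    return (
        f"# News Development & Sentiment Analysis: {topic}\n"
        f"- **Time Period**: {from_date} to {to_date}"
    )


def prompt_result(description: str, rendered: str) -> dict:
    """Wrap a rendered string as a prompts/get result with a single user message."""
    return {
        "description": description,
        "messages": [
            {"role": "user", "content": {"type": "text", "text": rendered}}
        ],
    }


result = prompt_result(
    "analyzes the news development and sentiment evolution in a certain topic during a certain period",
    render_topic_prompt("AI regulation", "2025-04-01", "2025-04-15"),
)
print(result["messages"][0]["role"])  # user
```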

Dynamic Discovery

MCP features dynamic capability discovery. When a client connects to a server, it can query the available functionality through three list methods:

- tools/list

- resources/list

- prompts/list

This dynamic mechanism enables clients to automatically adapt to each server's specific capabilities without requiring a priori knowledge of the server's functionality.
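Because tools/list returns each tool's name together with a JSON Schema describing its inputs (inputSchema), a client can check a proposed call before sending it. The sketch below uses a hand-written, simplified tools/list result modelled loosely on fetch_news (not output captured from our server), and a hypothetical check_arguments helper that flags unknown tools, missing required arguments, and unexpected ones.

```python
# Simplified, hand-written tools/list result (illustrative, not captured output)
tools_list_result = {
    "tools": [
        {
            "name": "fetch_news",
            "description": "Retrieves news articles.",
            "inputSchema": {
                "type": "object",
                "properties": {
                    "query": {"type": "string"},
                    "from_date": {"type": "string"},
                    "to_date": {"type": "string"},
                    "page_size": {"type": "integer"},
                },
                "required": ["query"],
            },
        }
    ]
}


def check_arguments(tools_result: dict, name: str, arguments: dict) -> list[str]:
    """Return the problems with a proposed tools/call, based on the discovered schema."""
    tools = {tool["name"]: tool for tool in tools_result["tools"]}
    if name not in tools:
        return [f"unknown tool {name!r}"]
    schema = tools[name]["inputSchema"]
    problems = [f"missing required argument {arg!r}"
                for arg in schema.get("required", []) if arg not in arguments]
    problems += [f"unexpected argument {arg!r}"
                 for arg in arguments if arg not in schema.get("properties", {})]
    return problems


print(check_arguments(tools_list_result, "fetch_news", {"q": "MCP"}))
# ["missing required argument 'query'", "unexpected argument 'q'"]
```

The example call passes q (NewsAPI's own parameter name) instead of the tool's query argument, which is exactly the kind of mismatch that schema-based discovery lets a client catch before the call reaches the server.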

MCP Client Implementation

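The client below launches our server as a subprocess over stdio, performs the initialization handshake, and then exercises each primitive:

```python
import asyncio

from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

# Command for launching the MCP server as a subprocess (stdio transport)
server_params = StdioServerParameters(
    command="python",  # Executable
    args=["server.py"],  # Optional command line arguments
    env=None,  # Optional environment variables
)


async def run():
    async with stdio_client(server_params) as (read, write):
        # A sampling_callback can be passed to ClientSession to serve
        # server-initiated sampling requests; our news server does not use sampling
        async with ClientSession(read, write) as session:
            # Protocol handshake: exchange versions and capabilities
            await session.initialize()

            # List available prompts, resources, and tools
            prompts = await session.list_prompts()
            resources = await session.list_resources()
            tools = await session.list_tools()

            # Get a prompt (argument values are strings)
            prompt = await session.get_prompt(
                "topic-news-analysis",
                arguments={"topic": "AI regulation", "from_date": "2025-04-01", "to_date": "2025-04-15"},
            )

            # Read a resource (returns a ReadResourceResult with a list of contents)
            topics = await session.read_resource("memo://newstopics/")

            # Call a tool (argument names must match the tool's parameters)
            result = await session.call_tool(
                "fetch_news",
                arguments={"query": "news topic or query", "from_date": "2025-04-01", "to_date": "2025-04-15"},
            )
            print(result.content)


if __name__ == "__main__":
    asyncio.run(run())
```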

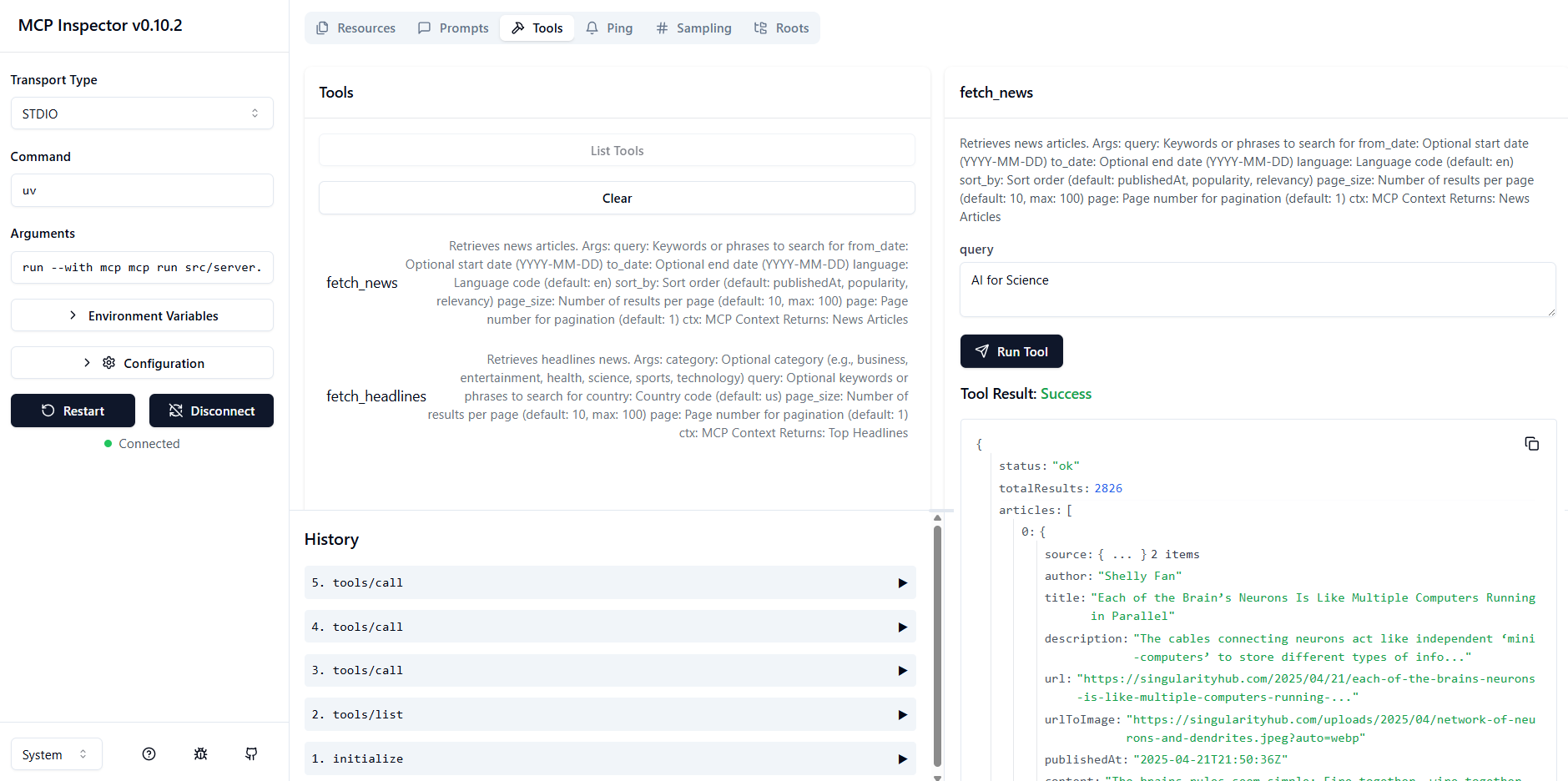
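session.call_tool returns a CallToolResult whose content is a list of content items (text, images, embedded resources) and whose isError flag signals tool-level failures. The sketch below uses a plain dictionary in that shape, with placeholder data, and a hypothetical tool_text helper that collects the text parts and turns an error result into an exception. With the SDK objects you would read result.content, result.isError, and part.text as attributes instead of dictionary keys.

```python
import json

# Placeholder result in the CallToolResult shape (not real NewsAPI output)
call_tool_result = {
    "content": [
        {"type": "text", "text": '{"status": "ok", "totalResults": 0, "articles": []}'},
    ],
    "isError": False,
}


def tool_text(result: dict) -> str:
    """Join the text parts of a tool result; raise if the tool reported an error."""
    texts = [part["text"] for part in result["content"] if part.get("type") == "text"]
    if result.get("isError"):
        raise RuntimeError("Tool error: " + " ".join(texts))
    return "\n".join(texts)


news = json.loads(tool_text(call_tool_result))
print(news["status"], news["totalResults"])  # ok 0
```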

Inspector Tooling

Anthropic has developed Inspector, a browser-based tool designed for testing and debugging servers that implement the Model Context Protocol. It provides a graphical interface to inspect resources, test prompts, execute tools, and monitor server logs and notifications:

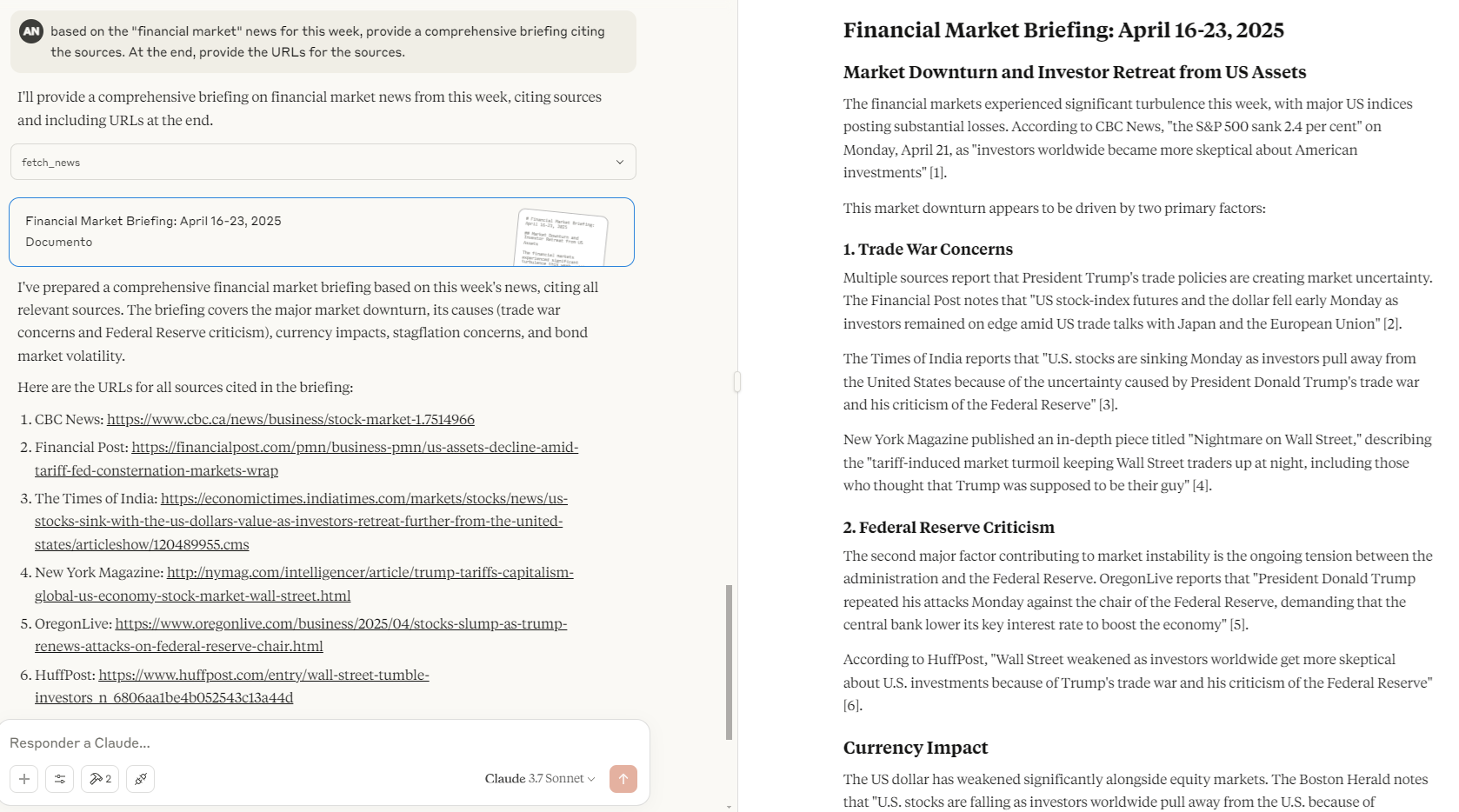

Demo in Claude Desktop

Once Claude for Desktop is installed, we need to configure it for our MCP server. To do this, open the Claude for Desktop configuration file in a text editor: ~/Library/Application Support/Claude/claude_desktop_config.json on macOS, or %APPDATA%\Claude\claude_desktop_config.json on Windows.

Then add the server under the mcpServers key. The MCP UI elements will only show up in Claude for Desktop if at least one server is properly configured:

```json
{
  "mcpServers": {
    "news": {
      "command": "uv",
      "args": [
        "--directory",
        "/ABSOLUTE/PATH/TO/PARENT/FOLDER/news",
        "run",
        "server.py"
      ]
    }
  }
}
```
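Rather than editing the file by hand, the entry can also be added with a short script. This is a sketch: add_server is a hypothetical helper, it assumes the file contains valid JSON (or does not exist yet), and it preserves any servers already listed under mcpServers. The usage example writes to a temporary file rather than the real configuration path.

```python
import json
import tempfile
from pathlib import Path


def add_server(config_path: Path, name: str, project_dir: str) -> dict:
    """Add (or replace) an entry under mcpServers, keeping any existing servers."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    config.setdefault("mcpServers", {})[name] = {
        "command": "uv",
        "args": ["--directory", project_dir, "run", "server.py"],
    }
    config_path.write_text(json.dumps(config, indent=2))
    return config


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "claude_desktop_config.json"
    # Simulate an existing configuration with another server already registered
    path.write_text(json.dumps({"mcpServers": {"other": {"command": "node", "args": []}}}))
    config = add_server(path, "news", "/ABSOLUTE/PATH/TO/PARENT/FOLDER/news")
    print(sorted(config["mcpServers"]))  # ['news', 'other']
```

Claude for Desktop reads this file at startup, so restart it after changing the configuration.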

This tells Claude for Desktop:

- There’s an MCP server named "news"

- To launch it by running `uv --directory /ABSOLUTE/PATH/TO/PARENT/FOLDER/news run server.py`

We can now test our server in Claude for Desktop.

References

- Microsoft. 2015. Language Server Protocol (LSP)

- Anthropic. 2024. Model Context Protocol

- Anthropic, Mahesh Murag. 2025. Building Agents with Model Context Protocol

- Hugging Face. 2025. MCP Course

- DeepLearning AI. 2025. MCP: Build Rich-Context AI Apps with Anthropic

Citation

```bibtex
@misc{carpintero-mcp-in-practice,
  title = {Model Context Protocol (MCP) in Practice},
  author = {Diego Carpintero},
  month = {apr},
  year = {2025},
  date = {2025-04-20},
  publisher = {https://tech.dcarpintero.com/},
  howpublished = {\url{https://tech.diegocarpintero.com/blog/model-context-protocol-in-practice/}},
  keywords = {large-language-models, agents, model-context-protocol, ai-tools, function-calling},
}
```